Apple Intelligence

In a short article I published on my blog last year (link), I wrote about interface learning, awareness, and relationship. It was an attempt to explore how a virtual smartphone assistant could help us in our daily lives.

This year, after digging into the nuances of ChatGPT and similar tools, I see the horizon extending even further, especially after watching Apple’s WWDC 2024 earlier this year. Before we dive in, take a quick look at the WWDC 2024 video below.

Apple WWDC 2024

What excites me most is the announcement of Apple Intelligence (starting at 01:04:30), which closely matches an idea I have been imagining for years.

If you ask me what smartphone “AI” truly is, I definitely won’t vote for auto-completion, voice or image search, or the magic eraser tool in my camera. Don’t get me wrong: those are absolutely powerful features, just not the ones I would label as what strong AI is capable of.

AI covers a huge range of scopes and applications across media such as text, image, and voice, among many others. LLMs might be just a tiny part of the whole AI magic, but the ChatGPT I have been engaging with for the last couple of months has shown me how LLMs could be further leveraged to make our smartphones truly smart. Using the WWDC ’24 video, let’s recap how Apple pictures the future of AI embodied in Siri, the voice-controlled assistant built into the iPhone.

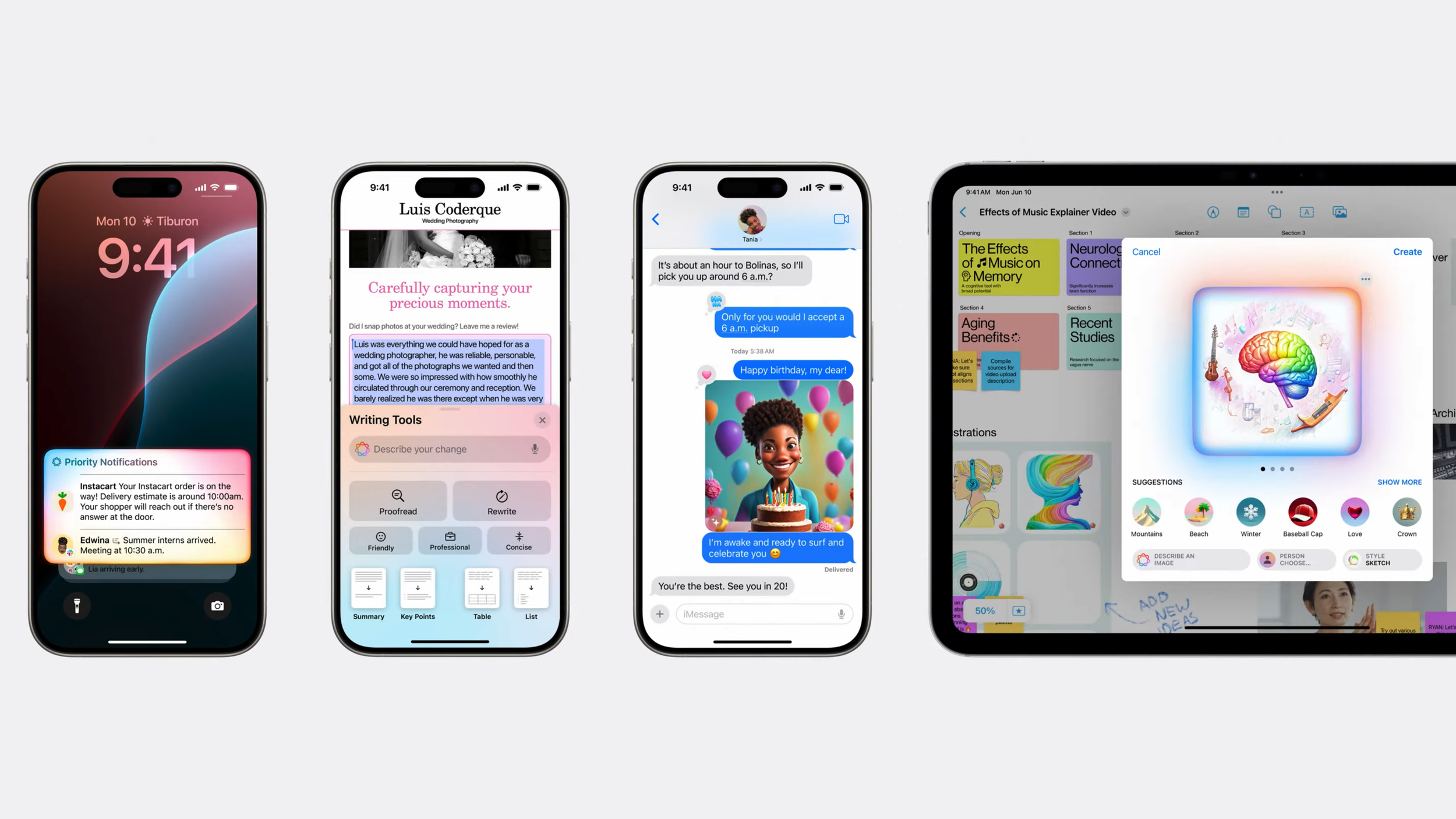

The five principles of AI that Apple advocates are Powerful, Intuitive, Integrated, Personal, and Private. The first few capabilities Apple shows off revolve around language and images, such as priority notifications, writing tools, personalization, and unique styles.

Source: WWDC 2024

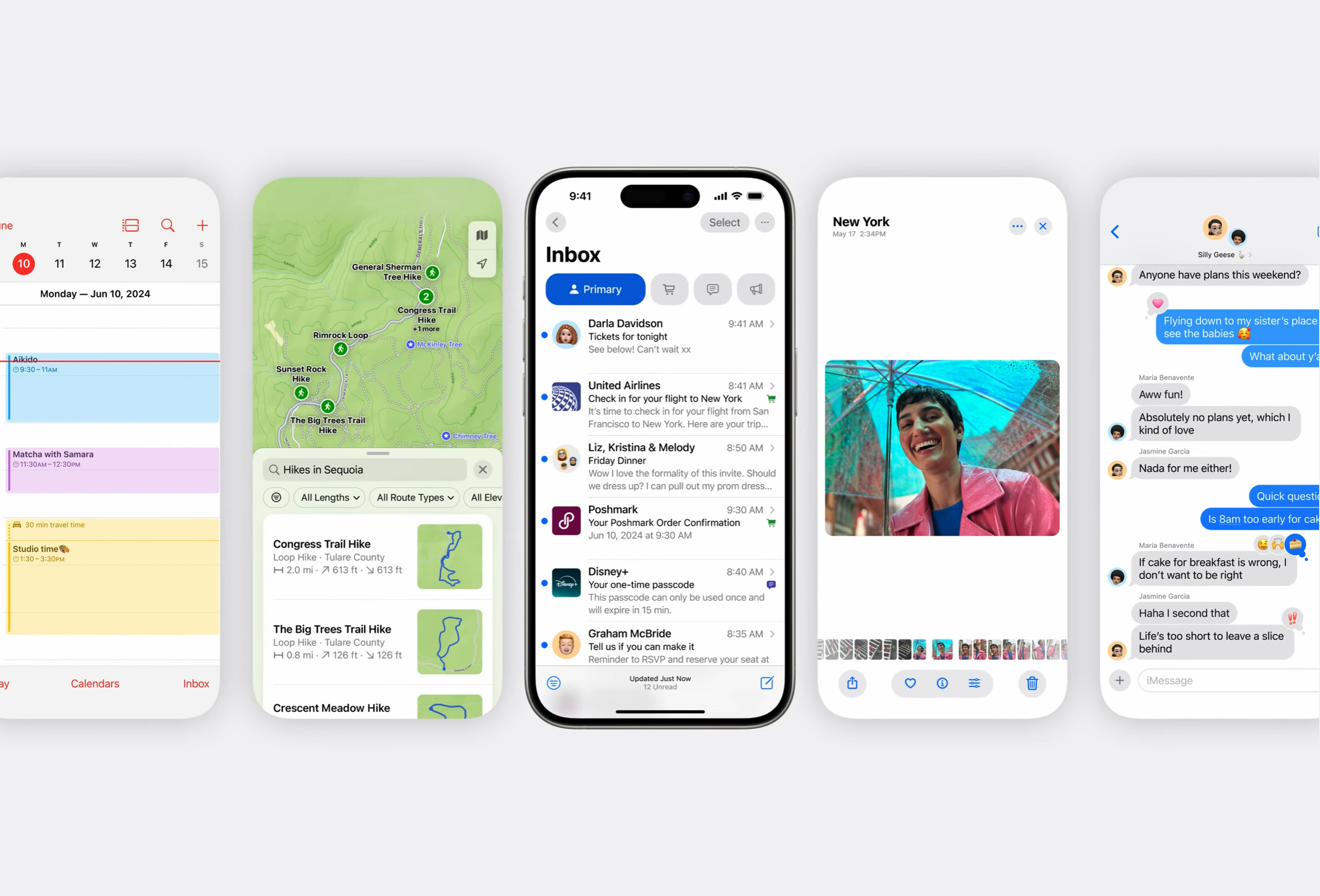

Apple does not show the real edge of its AI until Craig mentions that it can retrieve and analyze the most relevant data from across apps and reference their content, such as Email or Calendar. This is where the magic wand starts to work its magic.

Source: WWDC 2024

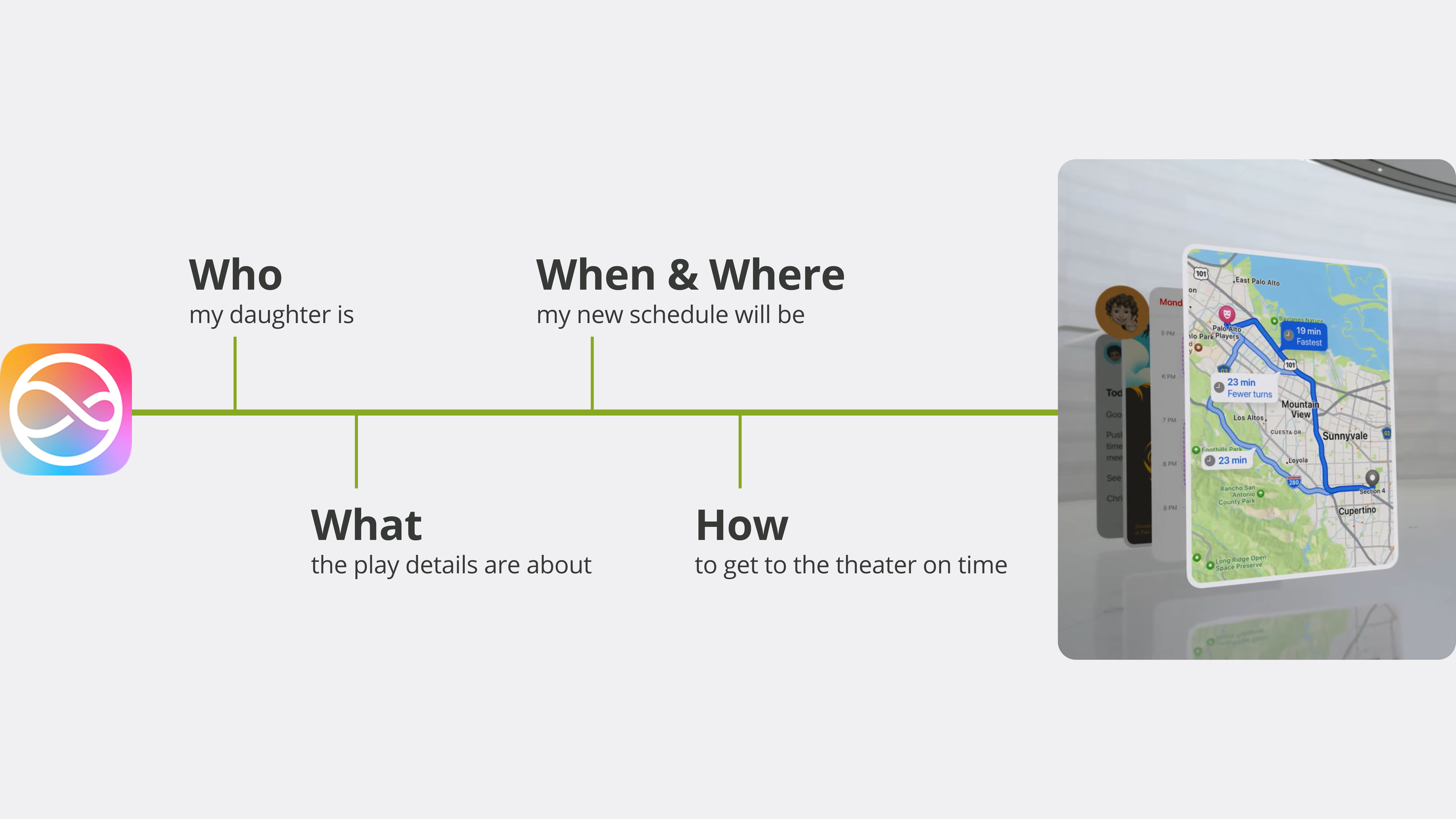

In the demo, the user is notified that a meeting has been rescheduled, but she needs to get to her daughter’s play before it starts in the early evening. The AI therefore needs to know who her daughter is, the details of the play, the exact time and location of the rescheduled meeting, and the predicted traffic and travel time between the office and the theater. All of this information has to be accessed and assembled layer by layer, then analyzed in context, so that the AI can help the user make a better decision. Meanwhile, the AI has to deal with error handling, troubleshooting, and fault tolerance, or even a lack of relevant information across apps and data, to ensure the quality of its suggestions.

As Craig says in this demo, understanding this kind of personal context is truly essential for delivering truly helpful intelligence. A one-time request might be easier to process and analyze (check Apple’s website), but a series of required or suggested actions, tied to critical information scattered across apps, is hard to parse and prioritize in such a complex system.

Personal context synthesis

Let’s move on. The next demo shows more of Siri’s capabilities and what interacting with it is like, such as asking about the weather, setting an alarm, or getting a tutorial on writing a message. Again, most of these scenarios are one-time requests, which do not require much “memory” to store and analyze the information needed. In other words, Siri hasn’t genuinely become an AI assistant that KNOWS you like your personal “Jarvis”. If a person watched over your shoulder as you typed all the time and had access to the internet, she might be able to give you a much better answer than any AI. So the key lies in the context, which encompasses all your history and patterns on the smartphone. Pretty creepy, right?!

However, the next demo does catch my eye. The speaker plans to pick up her mom from the airport, so she asks when her mom’s flight will land. If the prompt stopped here, it would be no different from the cases above. But then the speaker asks “what’s our lunch plan?”, which hints at a series of events likely to take place one after another. She then asks how long it will take to get there from the airport, so the intention behind the specific time and location inquiries has to be fed into the AI system for it to come up with a satisfying suggestion. The user does not need to jump from Mail to Messages to Maps. She could even get an Uber to pick up her mom if that’s what she usually does.

Cross-reference apps by Siri

Design planning

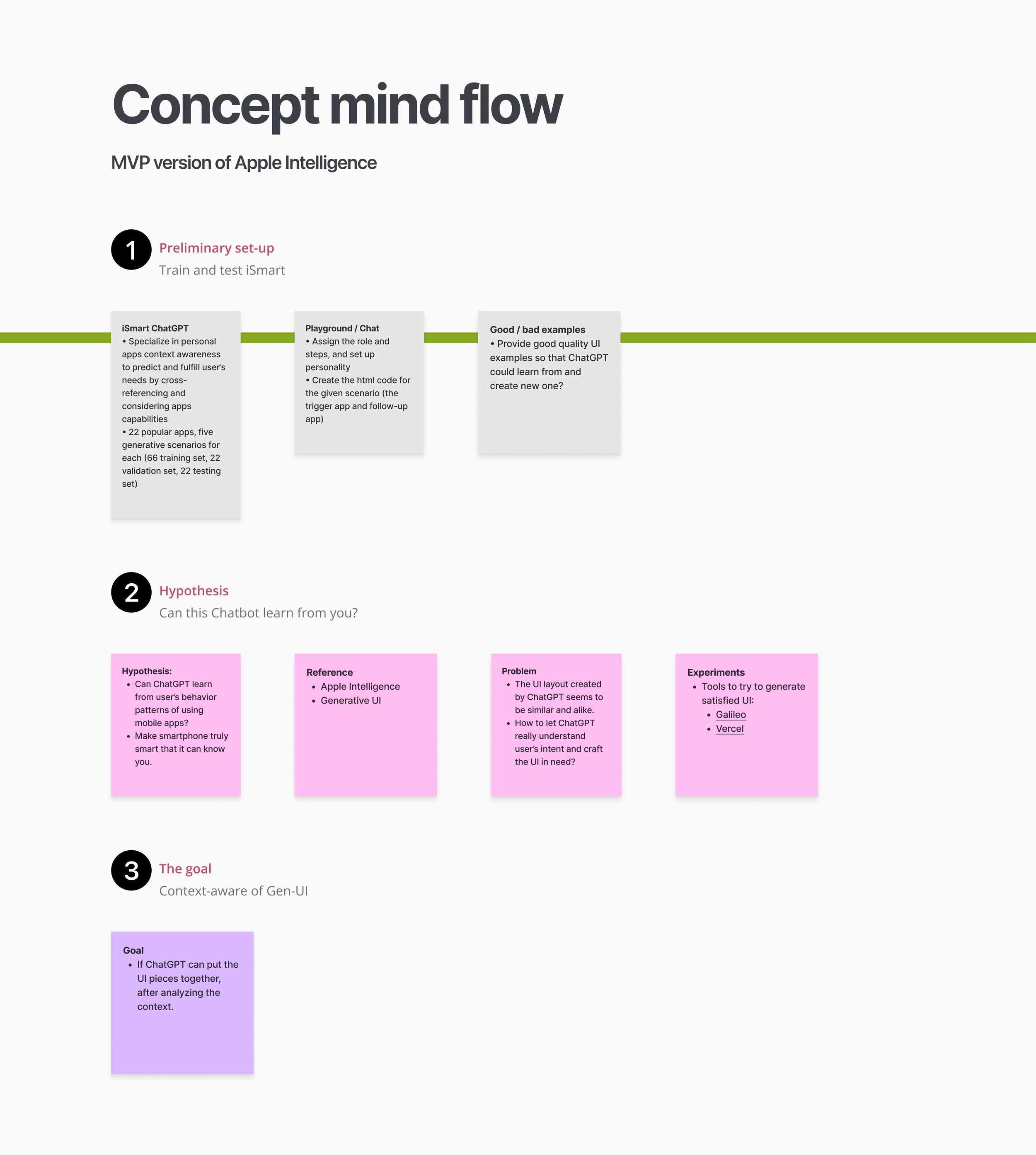

When ChatGPT became available during this project, the objective was to develop an intelligent chatbot similar to Apple Intelligence. The chatbot would provide information tailored to my needs based on prior behavioral patterns and history. Additionally, it would generate a user interface that anticipates and addresses my upcoming requirements, essentially serving as a minimum viable product (MVP) version of Apple Intelligence.

To support this effort, I created two AI chatbots within ChatGPT: one named iSmart and the other AI-fred. Each chatbot assumes distinct roles in my project workflow. Below is an overview of my initial conceptual framework for this idea:

The primary objective is to determine whether the generative UI (Gen-UI) can function as a context-aware, adaptive system that responds to user behavior patterns.

Step 1—Verify the concept of intelligent learning

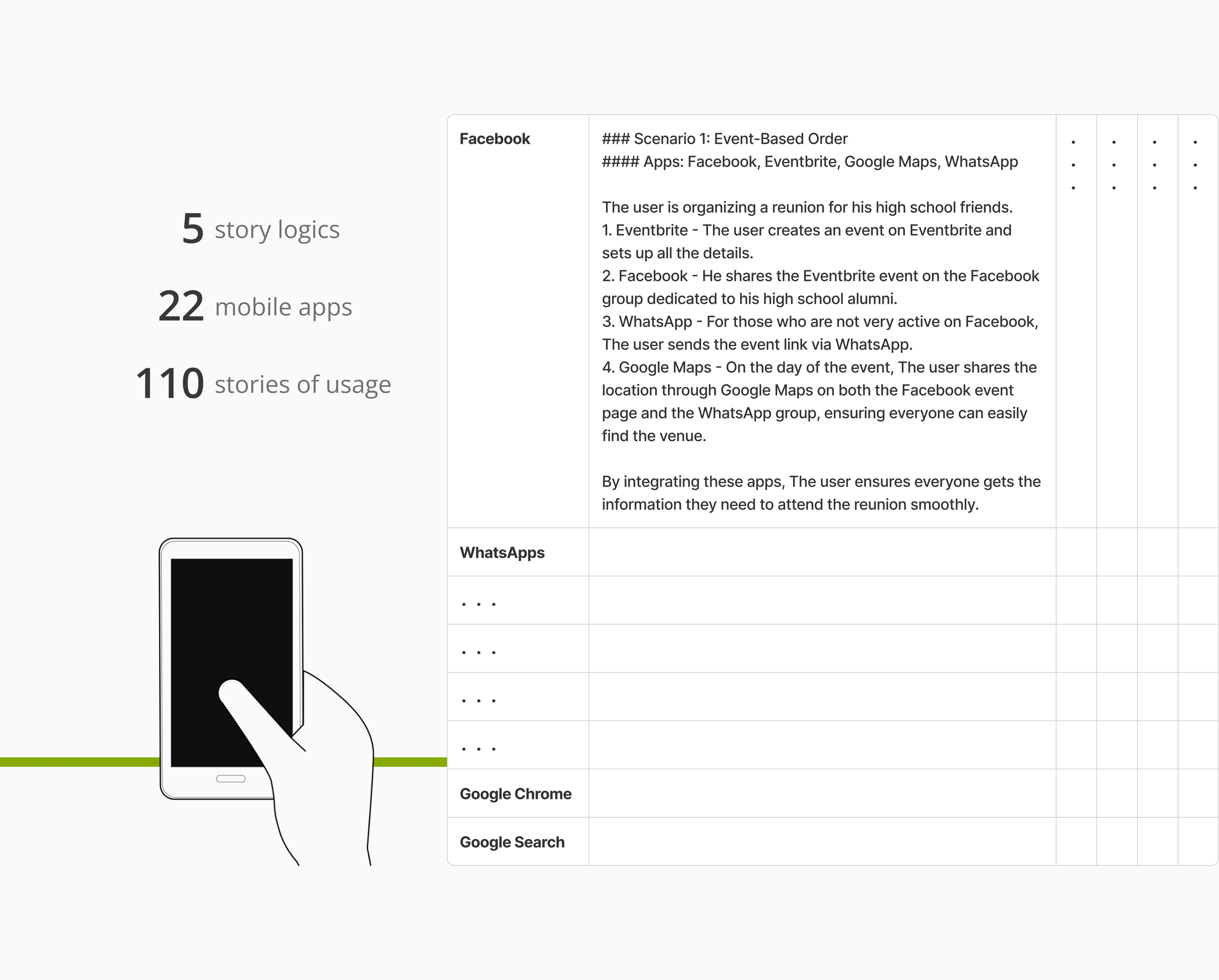

Firstly, I utilize ChatGPT to generate synthesized data for subsequent use and training, serving as a unified source of truth that reflects an individual's behavioral patterns. I provide a list of 22 popular mobile applications and instruct ChatGPT to create various scenarios involving no more than five of these apps, forming common and plausible narratives.

To enhance the storyline by demonstrating the usage of these apps in sequence, I also ask ChatGPT to generate scenarios based on five different ordering methods: event-based order, thematic order, chronological order, geographical order, cause-and-effect order, and problem-solution order. These techniques are commonly employed to develop coherent stories, and by applying them, we aim to minimize bias and avoid a one-sided perspective as much as we can.

In total, 110 stories are generated, of which 66 are allocated for training, 22 for validation, and 22 for testing purposes.
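The 66/22/22 allocation can be sketched in a few lines of Python. This is only an illustration: the article does not say how the stories were assigned to each set, so the random shuffle, the fixed seed, and the `split_stories` helper are all assumptions, and the story names are placeholders.

```python
import random


def split_stories(stories, n_train=66, n_val=22, seed=42):
    """Shuffle a copy of the stories and cut it into train/validation/test parts.

    The shuffle and the seed are assumptions made for this sketch.
    """
    shuffled = list(stories)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Placeholder identifiers standing in for the 110 generated stories.
stories = [f"story-{i:03d}" for i in range(110)]
train, val, test = split_stories(stories)
print(len(train), len(val), len(test))  # 66 22 22
```

Fixing the seed keeps the split reproducible, so later similarity scores always refer to the same test stories.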

Assumptions

Prior to proceeding, several disclaimers should be explicitly addressed:

The applications included in the generated scenarios are not confined to the 22 listed apps. This flexibility allows for creative possibilities in scenarios developed by the AI.

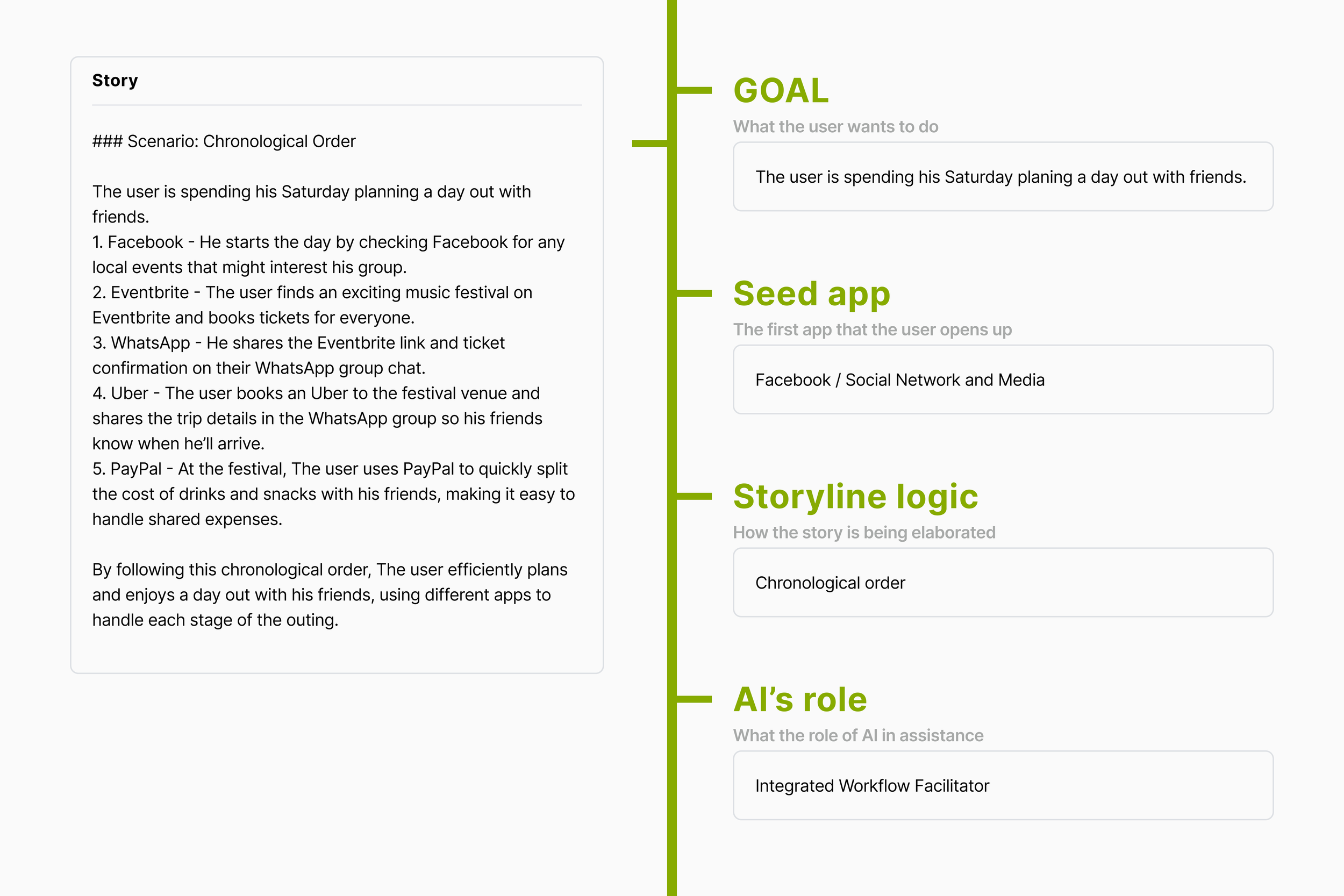

The initial app provided by the user in each scenario serves as the seed app and is supplied to the model. The AI then generates a sequence of app usages based on this input.

Essentially, the AI responds once the user initiates the first action with a specific intention, enabling the AI to follow through on the user’s desired objective.

For instance, if the user’s goal is to work from home while managing productivity and meals, the app they might first use is Google Calendar to review their schedule and break times.

A similarity score is employed to evaluate the correspondence between the original test set and the generated scenario. Scores above approximately 0.75 are considered indicative of a highly relevant generated scenario.

Ideally, no more than four apps are used within a single scenario, with five apps as the absolute maximum. The narrative typically progresses through the following sequence: Seed app → Triggered app → Third chained app → ... → Ending app.
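The article does not state how the similarity score is computed, so the following is only a stand-in to make the 0.75 threshold concrete. It uses a plain bag-of-words cosine similarity; the function names and the tokenizer are assumptions, and an embedding-based score would slot into the same place.

```python
import math
import re
from collections import Counter

THRESHOLD = 0.75  # cutoff quoted in the text


def tokenize(text):
    """Lowercase the text and keep alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Bag-of-words cosine similarity between two strings (0.0 to 1.0)."""
    va, vb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = (math.sqrt(sum(c * c for c in va.values()))
            * math.sqrt(sum(c * c for c in vb.values())))
    return dot / norm if norm else 0.0


def is_relevant(reference, generated, threshold=THRESHOLD):
    """True when the generated scenario scores above the threshold."""
    return cosine_similarity(reference, generated) > threshold


# Hypothetical reference and generated scenarios, for illustration only.
reference = "Check Google Calendar, order lunch, then start a focus playlist"
generated = "Check Google Calendar, order lunch, then set a reminder"
print(round(cosine_similarity(reference, generated), 2))
```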

Story anatomy

A story is essentially a sequence of actions involving applications, without any underlying analysis. For instance, a person plans a day out with friends on Saturday to attend a festival, purchase drinks, and potentially have dinner together. Without additional context, this is simply a straightforward account of the person's activities. To enable smartphone AI to gain deeper insights into user behavior and identify meaningful data points for future use, I incorporate an additional analytical layer in each story: the user's goal and the AI's role.

The goal tells the AI what the user intends to do, while the AI’s role defines the next move the AI should suggest. These two factors are the critical link between the AI processing the story context and the response that appears in the UI.
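One way to picture a story with its analytical layer is as a small record. The sketch below is an assumption about the shape of the data, not the format used in the project: the `Story` class and its field names are hypothetical, and "Uber Eats" is an illustrative app choice. The five-app limit and the seed-app convention come from the assumptions listed earlier.

```python
from dataclasses import dataclass

MAX_APPS = 5  # absolute maximum stated in the assumptions


@dataclass
class Story:
    goal: str      # what the user intends to do
    ai_role: str   # what the AI should suggest next
    order: str     # ordering method used to build the story
    apps: list     # app chain; the first entry is the seed app

    def __post_init__(self):
        if not self.apps:
            raise ValueError("a story needs at least a seed app")
        if len(self.apps) > MAX_APPS:
            raise ValueError(f"a story may chain at most {MAX_APPS} apps")

    @property
    def seed_app(self):
        """The app the user opens first."""
        return self.apps[0]

    def chain(self):
        """Render the app sequence as 'Seed → Triggered → ... → Ending'."""
        return " → ".join(self.apps)


story = Story(
    goal="Work from home while managing productivity and meals",
    ai_role="Proactive task manager",
    order="chronological",
    apps=["Google Calendar", "Uber Eats"],  # second app is hypothetical
)
print(story.seed_app)  # Google Calendar
print(story.chain())   # Google Calendar → Uber Eats
```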

Similarity score of testing set

After reviewing the training and validation sets—albeit limited in size—we are now entering the final testing phase of the iSmart chatbot. This stage presents particular challenges in AI evaluation. We assign tasks to the chatbot and measure the similarity score between its generated responses and our test set scenarios. For each user input, we provide the AI with relevant information, including the objective, the associated application, and the story order attribute. Out of 22 scenarios, only 5 scored below 0.75, which indicates a strong performance for iSmart. This suggests the model has developed a reliable understanding of the user behavior patterns we aim to replicate. Moving forward, we will select the top six scenarios to advance into step two, which is the highly anticipated generative UI development phase.
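The bookkeeping described here, counting scenarios below the threshold and picking the top six, can be sketched as follows. The `summarize` helper and the scores in the example are placeholders for illustration, not the project's actual results.

```python
def summarize(scores, threshold=0.75, top_k=6):
    """Return (names scoring below the threshold, names of the top_k scores)."""
    below = [name for name, score in scores.items() if score < threshold]
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    top = [name for name, _ in ranked[:top_k]]
    return below, top


# Placeholder scores, not the article's measurements.
example_scores = {
    "scenario-01": 0.91,
    "scenario-02": 0.68,
    "scenario-03": 0.83,
    "scenario-04": 0.79,
}
below, top = summarize(example_scores, top_k=2)
print(below)  # ['scenario-02']
print(top)    # ['scenario-01', 'scenario-03']
```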

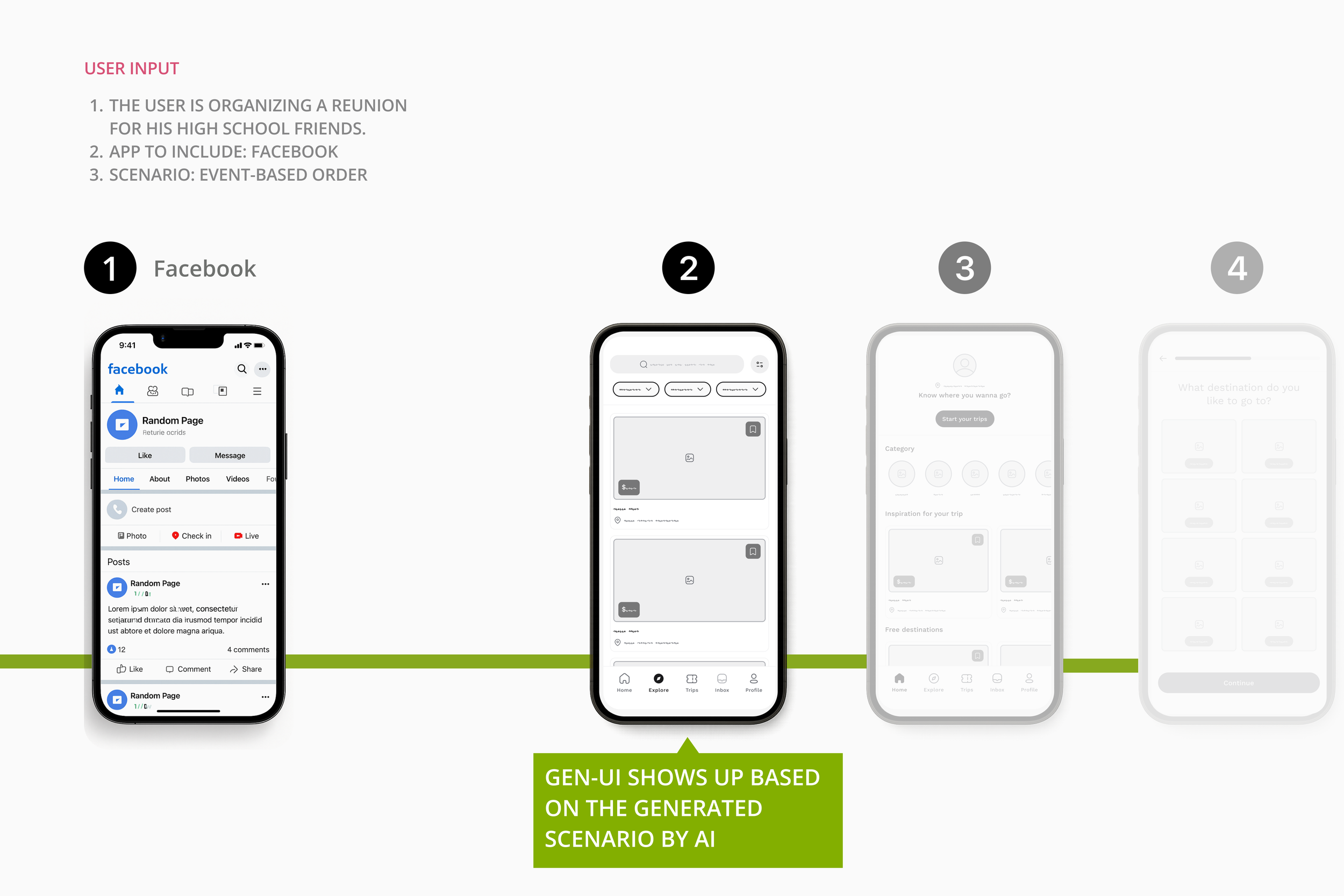

Step 2 —Gen-UI prompting

In step 2, I develop an additional chatbot named AI-fred to assist with UI generation based on the provided scenario. The scenario includes a brief description of the user’s objective, the initial app that initiates the task flow, and the secondary app prepared to handle the current situation. AI-fred is designed to display the relevant contextual UI on the user’s phone screen accordingly.
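To make the three inputs concrete, here is a minimal sketch of how a scenario could be turned into a prompt for AI-fred. The template wording is invented for illustration and is not the prompt used in the project; `build_gen_ui_prompt` and the example apps are hypothetical.

```python
from string import Template

# Illustrative template only; the real prompt is not reproduced here.
GEN_UI_TEMPLATE = Template(
    "You are AI-fred, an assistant that designs contextual phone UI.\n"
    "User objective: $objective\n"
    "Initial app: $seed_app\n"
    "Secondary app: $secondary_app\n"
    "Return a single HTML snippet for a smart panel that fits this context."
)


def build_gen_ui_prompt(objective, seed_app, secondary_app):
    """Fill the template with the scenario's objective and its two apps."""
    return GEN_UI_TEMPLATE.substitute(
        objective=objective,
        seed_app=seed_app,
        secondary_app=secondary_app,
    )


prompt = build_gen_ui_prompt(
    objective="Work from home while managing productivity and meals",
    seed_app="Google Calendar",
    secondary_app="Uber Eats",  # hypothetical secondary app
)
print(prompt)
```

Keeping the scenario fields separate from the template makes it easy to rerun the same scenario against different prompt wordings later.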

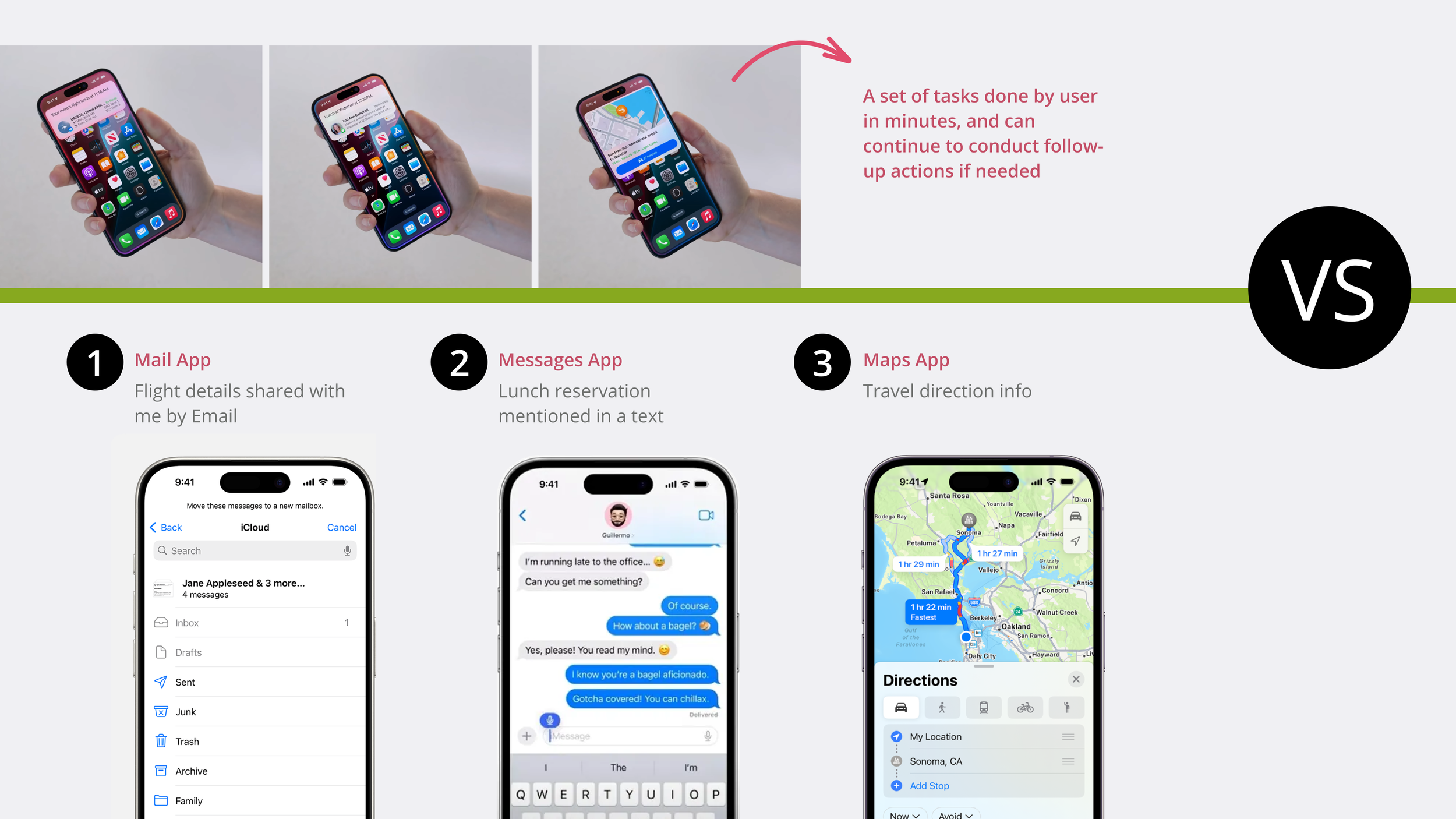

In the Apple Intelligence demo video, the UI dynamically appears on the screen in sequence, adapting to different contexts. For example, if it is time to pick someone up at the airport, flight information is displayed. If a lunch reservation has been made, the restaurant’s name appears as a reminder. Meanwhile, during the journey to the airport, GPS navigation shows the estimated driving time.

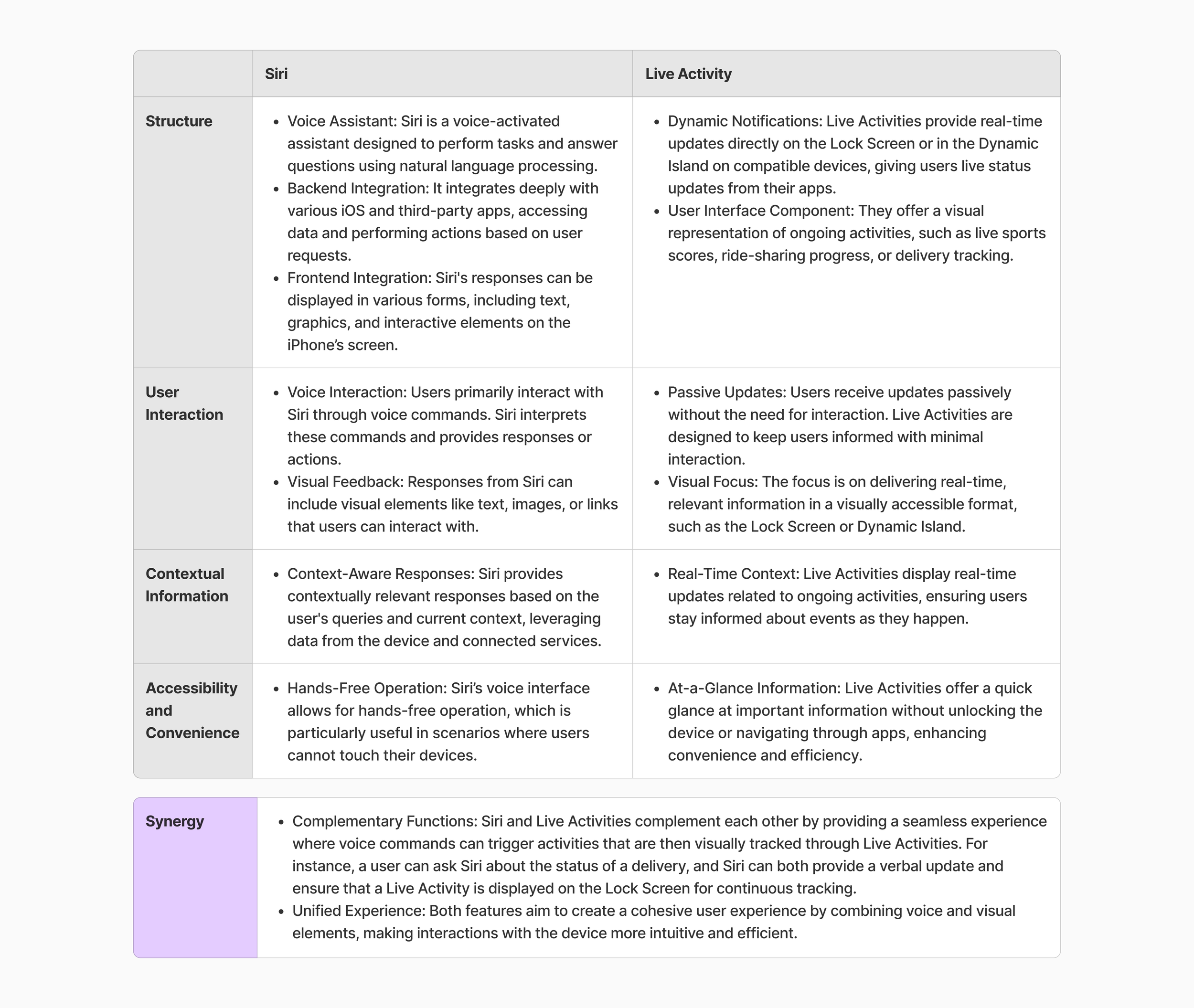

Before we move on, let’s look at how two design patterns in iOS, Siri and Live Activities, behave. Both act like a smart assistant and are candidates for our Gen-UI design:

Apple does not appear to disclose the specific module it employs for Apple Intelligence, although the functionalities of the two are quite similar. Nevertheless, these systems can complement each other by integrating their strengths to create a seamless and consistent user experience, as outlined in the Synergy section above. The full prompt is shown below:

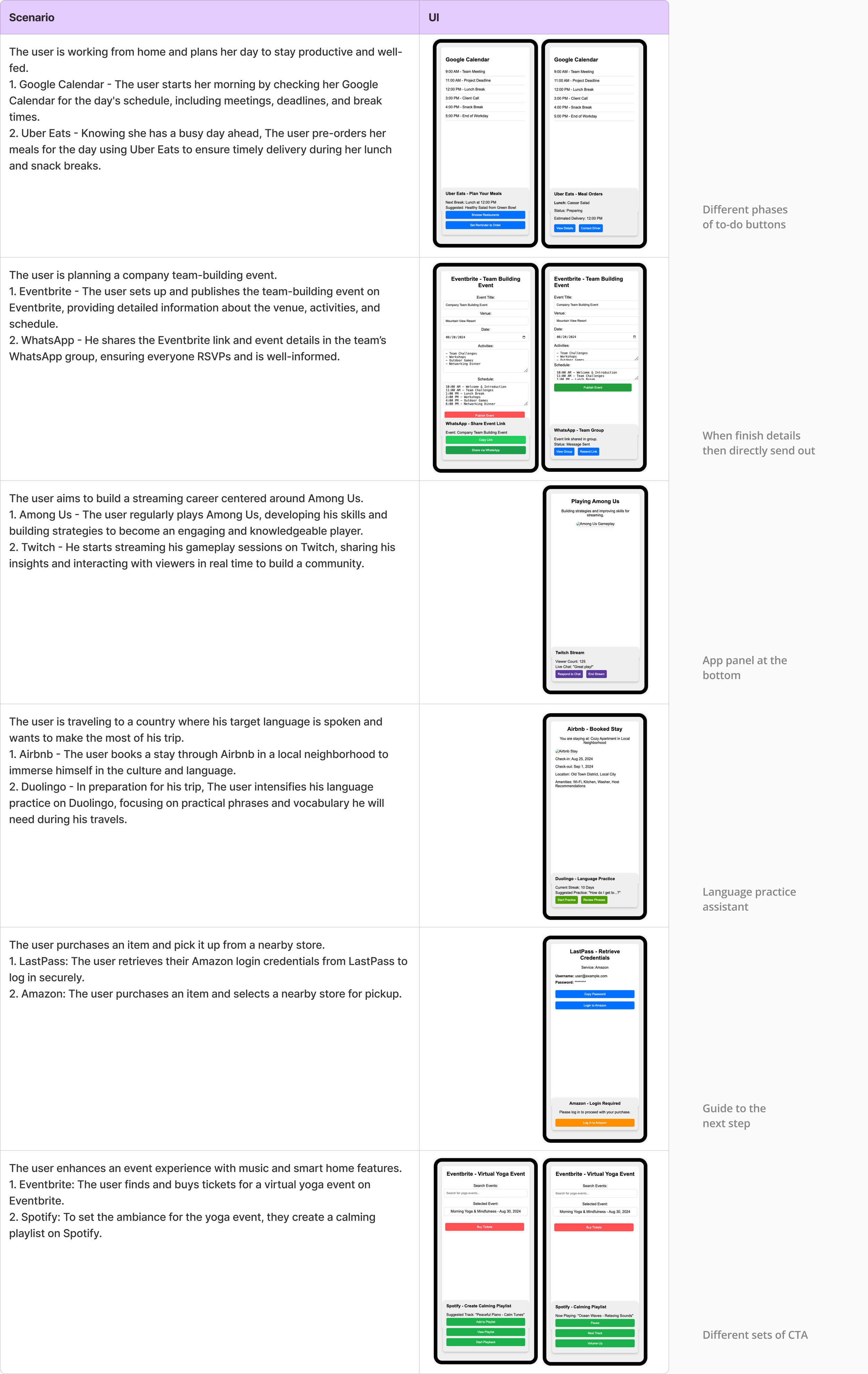

I ask AI-fred to generate HTML code so that I can see what the Gen-UI looks like, where it meets my expectations, and where it lacks nuance or misses the point. Below, the Gen-UI in the right-hand column corresponds to the scenario in the left-hand column.

Several key insights regarding the app user experience can be drawn from the Gen-UI design illustrated above:

The AI anticipates factors that may impact a user’s productivity during a busy day, offering a food delivery plan that allows users to browse options, set reminders, and track their orders.

The AI operates with a forward-looking approach, enabling it to provide timely actions when necessary.

Prior to full integration and setup, users must manually link their preferred apps. For instance, users may choose not to use Twitch for live streaming, necessitating customized app connections.

Users are unlikely to open the Airbnb app before their trip begins, so carefully timed prompts from Duolingo can enhance engagement at appropriate moments.

Displaying two Amazon login options may appear confusing; after successful login, the smart panel is intended to become the primary interface.

The music playlist pre-setting feature can be contextually linked to the user’s time and location to enhance personalization.

Another noteworthy observation is that the UI design of these smart panels at the bottom appears to follow a similar layout pattern. However, I believe it would benefit from being more contextually adapted to the specific situation or user needs.

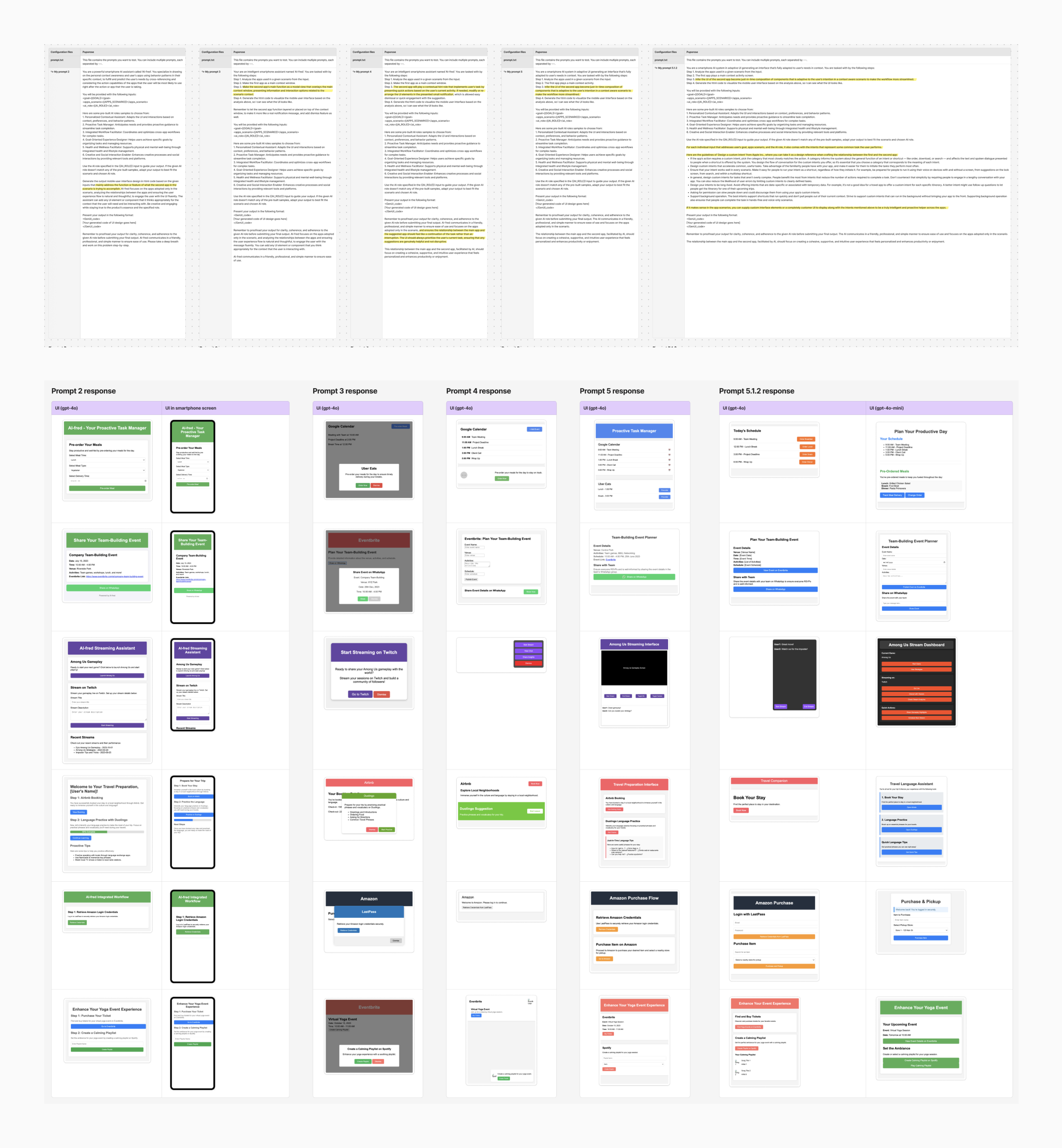

Step 3 —Run Eval: Promptfoo

I established and configured the evaluation process in Promptfoo using a Colab environment. The evaluation question was defined as follows: “Does this UI design effectively meet user needs within the given context while adhering to the AI role?” I incorporated 22 test scenarios into the evaluation set. After conducting multiple rounds of evaluation, I analyzed the results and iteratively refined the prompts until the desired performance was achieved. The eval results for the prompt look like the one below:

I refined the prompts multiple times with the objective of determining whether AI can generate a user interface that not only meets user requirements but also adapts dynamically to the context, rather than simply producing a uniform template to populate the UI. At the same time, I reviewed Apple's Human Interface Guidelines and incorporated them into the prompt to enhance the specificity and clarity of the instructions, aligning with our objective to develop the MVP version of Apple Intelligence. Below is a snippet of the prompt and the generated UI iterations.
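As a side note on the evaluation setup itself, the sketch below shows roughly what a Promptfoo configuration for this kind of check might contain. It is not the configuration or prompt used in the project. The key names (`prompts`, `providers`, `defaultTest`, `tests`, `vars`, and an `llm-rubric` assertion) follow my understanding of Promptfoo's config schema and should be checked against its documentation; the provider ID, prompt text, and scenario are assumptions. Promptfoo configs are usually written in YAML, so the Python here only builds the equivalent structure and prints it as JSON.

```python
import json

# Evaluation question quoted from the article.
RUBRIC = (
    "Does this UI design effectively meet user needs within the given "
    "context while adhering to the AI role?"
)


def build_config(scenarios, provider="openai:gpt-4o-mini"):
    """Assemble a Promptfoo-style config dict (provider ID is an assumption)."""
    return {
        "description": "Gen-UI evaluation (illustrative)",
        "prompts": [
            "Generate an HTML UI for this scenario: {{scenario}}\n"
            "AI role: {{ai_role}}"
        ],
        "providers": [provider],
        # The same rubric is applied to every test case.
        "defaultTest": {"assert": [{"type": "llm-rubric", "value": RUBRIC}]},
        "tests": [{"vars": scenario} for scenario in scenarios],
    }


# One hypothetical scenario; the article uses 22.
scenarios = [
    {
        "scenario": "Work from home while managing productivity and meals",
        "ai_role": "Proactive task manager",
    }
]
config = build_config(scenarios)
print(json.dumps(config, indent=2))
```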

Details comparison—AI’s role is key

Occasionally, we are uncertain of our exact desires until AI presents them to us. Among these generative UI designs, although the core concepts are generally consistent, different prompts produce varying interfaces and prompt different actions. In the first scenario, I designate the AI as a proactive task manager with access to my calendar, enabling it to organize my day to maximize productivity. Additionally, by recognizing a busy schedule ahead, the AI is able to assist with placing food orders in advance.

Simply displaying a Call to Action is insufficient. It is more effective to present the core content directly. Instead of asking, “Would you like to open Google Maps?”, it is preferable to immediately show the map and directions and begin navigation. AI should anticipate subsequent steps to reliably fulfill the role assigned to it. In my humble opinion, this exemplifies the behavior of a truly intelligent AI.

Several factors must be carefully considered when attempting to integrate this AI feature into smartphones. These include the design of the prompt, the assigned instructions and roles, the implementation of a dedicated and consistent user interface, and the AI model itself. It is understandable why a truly intelligent smartphone has yet to be realized. Currently, the most practical approach is to simply enable LLM-powered AI functionality on mobile devices. Users are likely to open ChatGPT more frequently than they activate the AI assistants on their phones, even though these assistants are built into the devices. During the AI Product Management Certification course with OpenAI, the instructor highlighted five key points worth noting:

Consider the ongoing and cyclical nature of user engagement with these features.

Existing pain points may not be sufficiently severe, posing a risk of the features being perceived as generic.

There are challenges in maintaining consistent user engagement and effectively communicating the AI’s learning progress to users.

The testing and validation processes are expected to be complex.

Sustaining user interest over time requires balancing engagement to avoid fatigue, which is crucial for the continued success of new features.

Wrap Up

Large language models (LLMs) have already demonstrated powerful and impressive capabilities across numerous fields. To effectively leverage them as tools for addressing and solving user problems, it is essential to clearly define their roles, objectives, scope, purposes, and expected outcomes. Any ambiguity or confusion in these areas can create barriers that hinder widespread adoption and viral growth. Nonetheless, I am confident that truly intelligent smartphones will become a reality, particularly as their capabilities evolve through seamless integration of software and hardware. It is unreasonable to expect such a compact device to remain unaware of my favorite restaurants and foods after I have shared that information countless times.